Handling source system metadata changes automatically are pretty easy with BimlScript. There are several solutions available online check here, here, and here. Recently, a client brought up a scenario that put a kink in the usual solution. The client supported multiple tenants, and they wanted to segregate the tasks of handling the metadata changes from the processing the data changes.

This scenario makes sense from a security point of view; there’s just one catch: SSIS runs a SELECT statement against both the source and destination components in a data flow during the build process. In turn, this means Biml has the same requirement.

How do we get around this problem?

Find All the code for this solution can in my public Biml repository on GitHub.

Environment Setup

My development environment has a local instance of SQL Server with AdventureWorks2014 on it. I’m going to use that as my source. I also created a database on this instance called BimlExtract to serve as my destination database.

To create a user that can only read the schema on the source system, I created a login and user named ‘Biml’. I granted this user VIEW DEFINITION in AdventureWorks2014. I also added this user to the db_owner group in BimlExtract. Now, this user can read the schema of the source, and create tables in the destination. I’ve included the T-SQL to set the permissions in Database Setup.sql.

Now, we’re ready to walk through the solution.

01_Connections.biml

This script simply sets up an OLEDB and ADO.Net connection to the source and destination databases. I’m using the ‘Biml’ user set up before. The reason I set up both types is there are some objects I use that require ADO.Net connections, and others require OLEDB connections.

Notice that I intentionally set up my ADONET_PackageSource connection to refer to BimlExpress. That’s because, during build time, I need to treat BimlExpress as my Package source. In 05_Packages.biml, you’ll see how I use an expression that will allow us to change the database back to AdventureWorks2014 during run time.

02_Database.biml

I need Database nodes in my Biml solution before I can add any other database objects to my Biml solution.

03_Schemas.biml

I need Schema nodes too. In this case, I hard code the Extract schema. I don’t know how many schemas there will be in the source, so I discover this in this script. Using the GetDatabaseSchema() function, I use my OLEDB_AdventureWorks2014 connection to read the metadata into a Biml object. From there I spin through each schema discovered in the source and write that to a Schema node in my Biml Solution.

04_Tables.biml

Now, I want to discover all the tables (and views) in my source connection. Notice line 3 uses the same GetDatabaseSchema() function as in 03_Schemas.biml. In tables, I spin through the list of tables twice. The first time I’m recording the nodes that will reference the source tables.

The second time I spin through, notice I’m hard coding the SchemaName attribute to BimlExtract.Extract. I want to add nodes to represent the destination tables, I want all of those to land in the BimlExtract database, in the Extract schema.

..\Code\DevelopmentHelper.cs

Before we move on to 05_Packages.biml, I want to share a helper object I use in most of my Biml solutions. This object requires a connection string on instantiation. Once instantiated, you can deploy database objects to the database in your connection string.

In our case, we’re going to deploy objects to our BimlExtract database.

05_Packages.biml

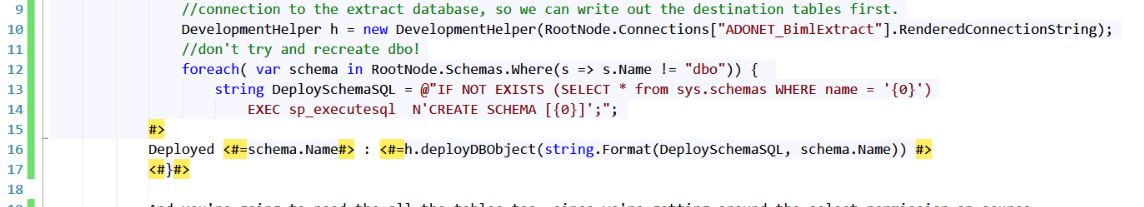

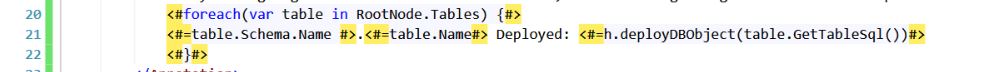

Everything to this point was just to enable this script. Before you can write a package, you have to have access to SELECT from the source and destination table. So on line 11, I instantiate a copy of my DevelopmentHelper class with a connection to ADONET_BimlExtract. Then, I want to make sure I have every schema in my solution in BimlExtract.

Usually, I’d only deploy my Extract Schema to my destination database. That assumes I can SELECT from my source tables. Since I can’t, I’m also going to spin up a copy of the source objects in my destination database. This is how I get around the permissions issue!

Then I use the same technique to deploy my source and destination tables in BimlExtract.

I wrap this all in an Annotation node, so you can see the table deployments happening. As each object is deployed you get a “true” for success and a “false” for a failure.

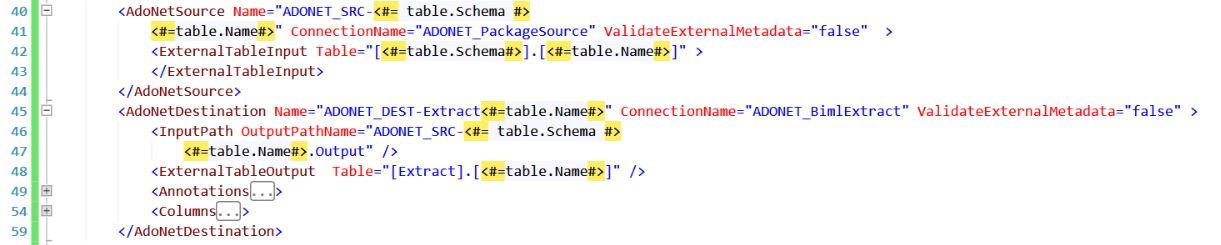

Starting on line 27, we start building our packages. I want to build one package for each Extract table. For this demo, I’m building a simple Truncate and Load pattern. My truncate statement is run against ADONET_BimlExtract, as you’d always expect (lines 31-33).

Then, in the data flow (lines 34-62), Notice I’m using the ADONET_PackageSource connection for my source. Remember this connection refers to BimlExpress, not AdventureWorks2014. I’m using ADONET_BimlExtract connection for my destination.

For each package we build, I add two connections, one for ADONET_BimlExtract and ADONET_PackageSource. Now, at run time, I don’t want to read from the copy of the source table, I want to read from the real source. To do that, I create an expression to change the database on my ADONET_PackageSource connection.

Now, you have a way to change this back to the real source database at run time!

Conclusion

Sometimes you have to get creative to solve a problem. This was just my first pass at solving the problem. There may be better ways to solve it. If there are, please let me know!

If you’re concerned with leaving a lot of objects behind in your destination database that you won’t need later, you can always set up a follow-up step in your build process to clean them up.

This solution could also apply in cases where you cannot access the source server due to development offline.