Over the next few blog posts in this series, I’m going to share with you the story of how a Data Lake project comes together. As I tell this story, I’m going to keep pointing back to traditional ETL work and to Automation techniques. Not all of these will include Biml. I hope to help you all see this as simply a next step in our jobs as data platform people.

Greenfield Project

This data lake project is a greenfield project. There was no prior data warehouse before. In fact, there was no prior business before this project. This project was conceived as a new way of tackling a social problem in our society. There are many non-profit and government agencies doing their best to address this issue with the tools they have. This project began as a simple question, “Is there any cooperation between these agencies?” The answer was: “Not really.”

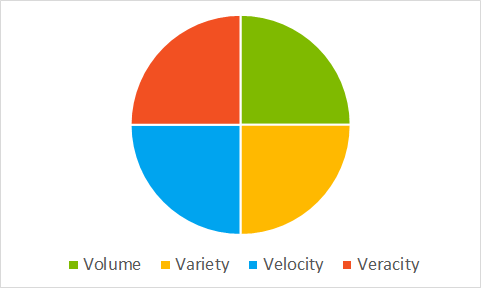

One of the organizing members suggested sharing research and operational data in such a way that all agencies could learn from successes and failures collectively, rather than individually. The challenge was, all these agencies collect their data in very different ways. They absolutely had a variety problem. They also had a possible Veracity problem.

After the green-fielding was complete, I connected with this group to help them see this vision come to life. We talked through several technologies that exist in the Microsoft stack, and how each may or may not help them get to their end goal of combining and collating data to help solve a social problem. We talked through Azure Active Directory to help control access to sensitive data. We talked through Azure Data Lake Storage and Analytics. We talked about HDInsight, Machine Learning, R Services, PowerBI. We even discussed non-Microsoft tools like Tableau and Qlik.

At the end of this conversation, I started building a project plan to help them move from that green field to a functional solution.This plan began with laying out the zones of their data lake.

Azure Data Lake Zone Layout

As much as I’m going to show how similar data lake work is like your traditional data warehousing, I do have to point out some differences. In data warehousing, once we start staging, transforming and loading data, we’re talking about tables in databases. In data lakes, we don’t have tables, we have folders of files. Groups of folders and files with a specific purpose are grouped together into zones. The three most common zones are equivalent to three sets of tables in data warehousing.

Raw Zone

Our first zone is the raw zone. This zone will serve as the landing point for source files. Like the extract (or stage) schema in our data warehouse, we want these files to match the source system as close as possible.In the data lake, we actually go one step beyond saying we want the schema of our raw files to match the source system, we also want these files to be immutable.

Immutable means once they are written to the raw folder we shouldn’t be able to modify or delete them. That way, we can always reconstruct different states from these files without having to retrieve them from the source system.

In this system, we set up a folder under the root of our data lake and named it Raw. Under that folder, we create one folder for each tenant agency that will be sharing data with us. The reason we set up these children folders is we need to be able to secure the incoming data in a way no tenant can read the raw files until it has been sterilized of any Personally Identifiable Information (PII). We also want to prevent tenant B from directly seeing tenant A’s raw data, only the standardized data.

To make files easier to identify, we add a new folder under each Tenant’s Raw folder for each file type they contribute. So if Tenant A had three types of files: one for household data, one for school system data, and one for medical visits there would be three folders under their tenant folder.

\Raw

\TenantA

\Household

\SchoolSystem

\MedicalVisit

Currently, we are discussing whether or not to include additional children folders under each file type folder to provide date partitioning. So under each filetype folder, you would find a year folder in “yyyy” format. Each yearly folder would include twelve monthly folders in the “mm” format. And each monthly folder would have a folder for each day that data is provided.

Staged Zone

To allow tenants to only see sterilized versions of the files they contribute to the data lake a second zone was needed. This zone would be the first place a tenant could see it’s own files in a sterilized format. That way, no one could get PII information through the data lake. The plan is to build a set of functions in U-SQL or Azure Data Factory to process new files from the Raw to the Staged Zone.

The Staged folder would match the folder structure of its source raw folder. That way you can easily see where the staged file came from. So, looking at folder structure in the Raw example you would see the following structure in the staged folder:

\Staged

\TenantA

\Household

\SchoolSystem

\MedicalVisit

Development Zone

To allow the data engineers a place to test out new features for the data, we created a development folder beside the raw and staged zones’ folders. The security for this folder would be set up so that only developers and specific test users could access data here. Once the new development data were approved for production use, that data could be created in the production zone.

In the development zone, we move away from a folder for each tenant. From this point forward, users would be using the data to spot patterns and insights across data sets. Take the example from before. Tenant A is providing school system data. Come to find out, 6 other tenants provide this data too. Developers decide to integrate these six separate sources into one unified data set. They would store this data in a structure like this:

\Development

\SchoolSystem

Production Zone

When development data gets approved the process to build the development data is repeated, except the output would be written to the production folders. This structure would exactly match Development. If the data volume in development grew to require multiple files, and the number of files grew, then these folders could also get date partitioned children folders in the yyyy\mm\dd format.

Zone Architecture

Don’t let this article convince you to only set up four zones in your data lake. There could be valid reasons to set up additional zones. I’ve seen other architectures set up zones for temporary data. That way files that only needed to stick around for a few hours had a special home. I’ve seen zones set up to serve specific client tools. For example, one set of files set up for consumption by Tableau, and others set up for sending back onto a client’s premises. The zones should match the logical divisions in your business data.

Also, keep in mind that date partitioning may not work in all cases. If your business cycle is like nation-wide pizza delivery companies, you may do more than 50% of your business on a single day of the year. For that one day each year you would find incredible amounts of data compared to the rest of the files. In that case, the amount of time to process that one file would create bottlenecks when processing a year’s worth of data. If you are wise, you’ll profile your source data and pick a distribution key that makes sense for your data.

You can always change your distribution key later if you simply don’t know what your data will be. That’s the case we’re in with this greenfield project. Just be prepared for the statistics to show that you need to re-arrange your data within your data lake to provide greater performance and lower costs of ownership.

Next Time…

In my next few Azure Data Lake articles, I’ll start sharing some of the questions and answers from this project. I’m finding that the documentation for Azure data lakes is very good, but sometimes you have to read three or four articles, and then synthesize your answers. I’d like to save you some time and share some of that research with you. After adding those articles, I’ll start showing you some U-SQL built by hand, and then show you how to automate that! Stay tuned!