Moving to U-SQL for your ETL can feel like a step back from the drag and drop functionality we have in SSIS. But there is one great thing about your ETL being defined in text rather than a UI: you can automate it! Today I’m going to show you how you can automate the part of your script where you establish your schema on read.

File Interrogator

Currently, U-SQL doesn’t support a way to dynamically define the columns at runtime. The only option we have is to script our script. That makes this very similar to what I do in Biml. I create a Biml script that defines what I want my SSIS packages to look like, and then generate the packages. The secret to generating packages based on a file is my File Interrogator. This class reads a delimited file, and determines the schema! If you’d like to follow along with my demo, you can get the code from GitHub here.

My solution assumes you have the file on your local machine. To update this to use files from your Data Lake Store, you’d have to add a reference to the appropriate assemblies.

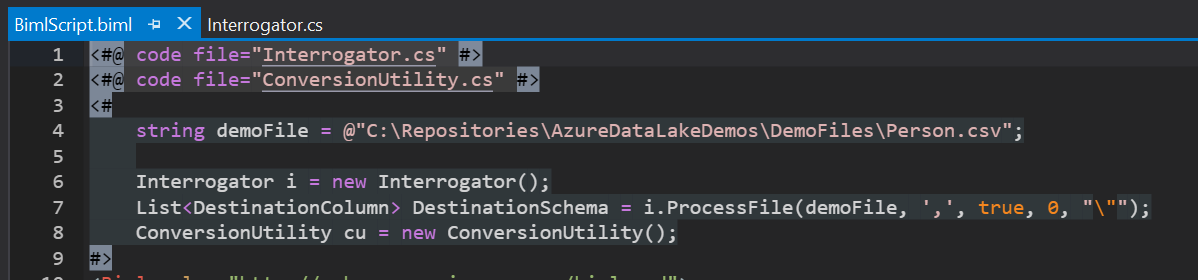

What we do is we instantiate an Interrogator and then use it to read the file in and scan it for column metadata. In this case, I’ve told the interrogator the file is comma delimited, has headers, and can contain quoted text. I also instantiate a copy of my ConversionUtility, so we can output data types in c# format.

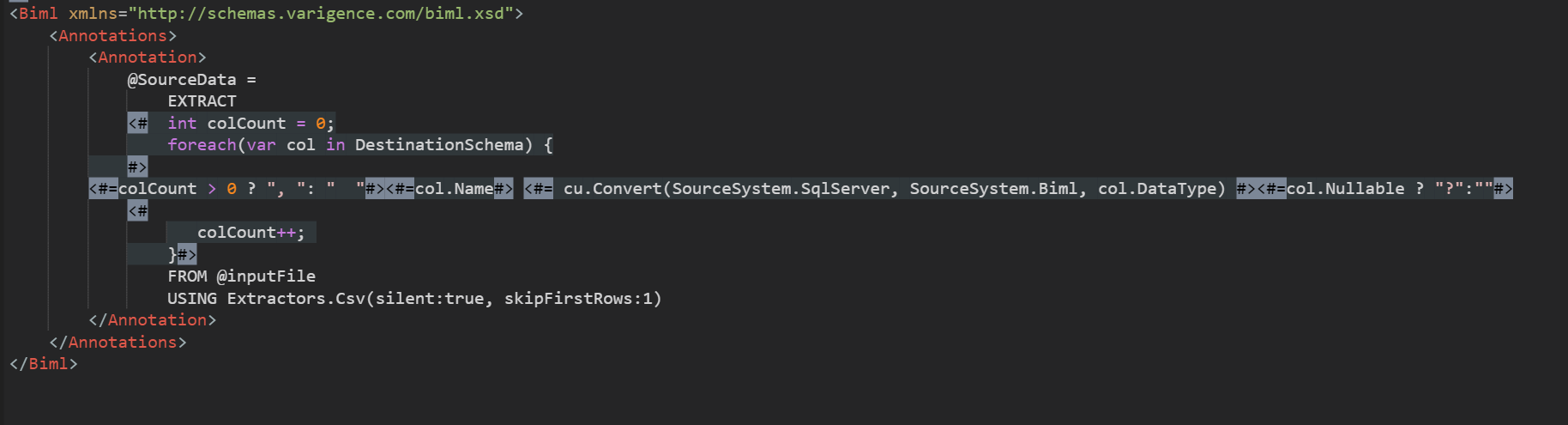

Right now, Biml doesn’t directly support writing a U-SQL script, so I’m writing out my script via the Annotations node. In this portion of the script I want to stub out my Extract statement. The logic is pretty simple, for each column I find in the source file I want to read it out with the best fit datatype. If this is the first column I won’t add a comma to the script, and if it is, I do.

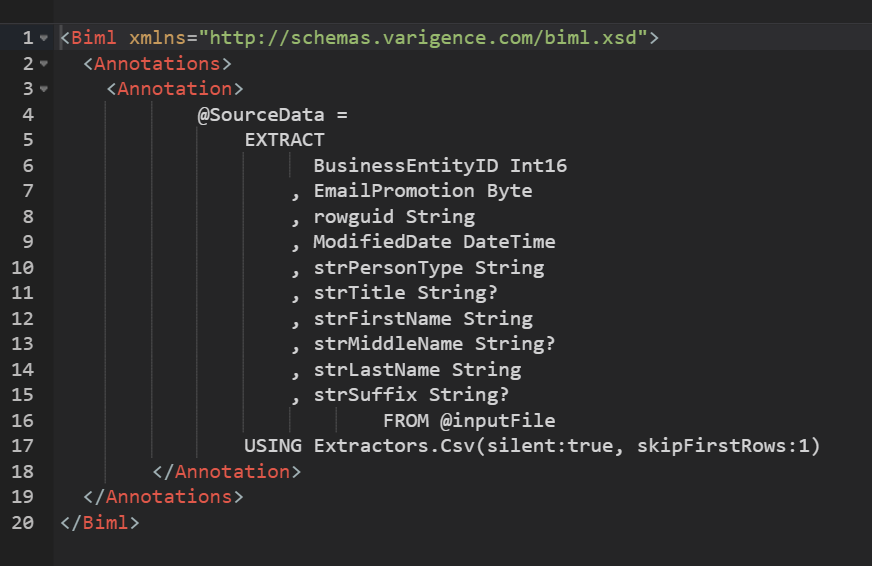

I also use my ConversionUtility.Convert function to convert from SqlServer datatypes (the default for my Interrogator) to Biml data types, which just happened to be c# data types! Also, if the file has nulls in a certain column, I set that column to be nullable in my U-SQL script too (that’s what that question mark gives us). So when we run this simple script against a CSV of the Person.Person table from AdventureWorks, we get the following output:

So now, we could take this output, and put it into a U-SQL file, and we have half of our ETL processing script complete! Next time, we’ll work through how we could generate transforms automatically from metadata. In the meantime, if you have questions, send them in. I’m here to help!