Let’s continue the series on getting rid of duplicate data. Today we’re going to use self joins to get rid of them. A self join is a join from a table back to itself. It might seem weird to do that, but by the end of this article you’ll see how it’s useful. I use self joins to de-dupe when the table has a primary key, yet that primary key didn’t prevent duplicates from being inserted. Let’s set up a demo table now.

CREATE TABLE TroubledTableWithPK ( ttPrimaryKey INT IDENTITY(1,1) , FirstName VARCHAR(50) , LastName VARCHAR(50) , email VARCHAR(255) )

Now, let’s copy some data from the backup table we created before.

INSERT INTO TroubledTableWithPK (FirstName, LastName, email) SELECT * from OriginalTroubledTable

Take a look at the duplicates in that table.

SELECT email, COUNT(*) FROM TroubledTableWithPK GROUP BY email HAVING COUNT(*) > 1

Yeah, we have duplicates! Take a closer look at one of these duplicates and you can clearly see how the primary key, which was supposed to uniquely identify the row to the table, didn’t prevent duplicate email addresses from being added.

SELECT * FROM TroubledTableWithPK WHERE email ='elit.a.feugiat@Etiam.com'

Let’s propose a way to remove all the duplicate records that aren’t the “last” copy of the duplicate data. The last copy would be the record with the highest ttPrimaryKey Value.

SELECT email, MAX(ttPrimaryKey) FROM TroubledTableWithPK WHERE email ='elit.a.feugiat@Etiam.com' GROUP BY email

If we were to join TroubledTableWithPK to a sub select of TroubledTableWithPK, we could show all those records we want to remove.

SELECT tt.* FROM TroubledTableWithPK tt INNER JOIN ( SELECT email, MAX(ttPrimaryKey) AS maxTTPrimaryKey FROM TroubledTableWithPK GROUP BY email) maxvals ON tt.email = maxvals.email AND tt.ttPrimaryKey != maxvals.maxTTPrimaryKey WHERE tt.email ='elit.a.feugiat@Etiam.com'

Compare these results with the SELECT * statement we ran. By joining on email, and where the ttPrimaryKey != MAX(ttPrimaryKey) shows us the “early” duplicates in the table. Now all we have to do is cahnge the SELECT to a DELETE statement.

DELETE FROM tt FROM TroubledTableWithPK tt INNER JOIN ( SELECT email, MAX(ttPrimaryKey) AS maxTTPrimaryKey FROM TroubledTableWithPK GROUP BY email) maxvals ON tt.email = maxvals.email AND tt.ttPrimaryKey != maxvals.maxTTPrimaryKey WHERE tt.email ='elit.a.feugiat@Etiam.com'

So now when we run the SELECT *, no more duplicates!

SELECT * FROM TroubledTableWithPK WHERE email ='elit.a.feugiat@Etiam.com'

So all we have to do to delete all the duplicates, not just the ones for Elit Feugiat, just remove the where clause.

DELETE FROM tt FROM TroubledTableWithPK tt INNER JOIN ( SELECT email, MAX(ttPrimaryKey) as maxTTPrimaryKey FROM TroubledTableWithPK GROUP BY email) maxvals ON tt.email = maxvals.email AND tt.ttPrimaryKey != maxvals.maxTTPrimaryKey

And All our duplicates not-so-magically disappear!

SELECT email, COUNT(*) FROM TroubledTableWithPK GROUP BY email HAVING COUNT(*) > 1

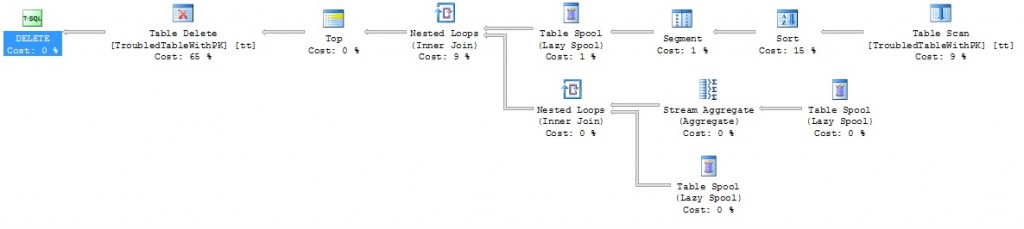

Now, I would like to say, you might want to check out the execution plan of this method. You could run into a situation where the table scan, the implicit order of the subquery and nested loops of the inner join to the sub table could give you troubles if you had a large number of records in TroubledTableWithPK. These operations could cause you some blocking issues if any other process was trying to read records from this table during the delete.

I’d also like to point out, if you’re deleting a large number of records, you could also find your transaction log filling up.

Deletes are going to cost you more than a truncate if you inserted the distinct records to a temp table, then truncated the table. It’s even more than selecting the distinct into a new table, then renaming the new table to the old name.

Now you have another tool available when you’re faced with duplicate data. Just one more method to go in this series: CTEs. Don’t worry, they’re not as hard as you might think. Until then, if you have any questions, let me know. I’m here to help!