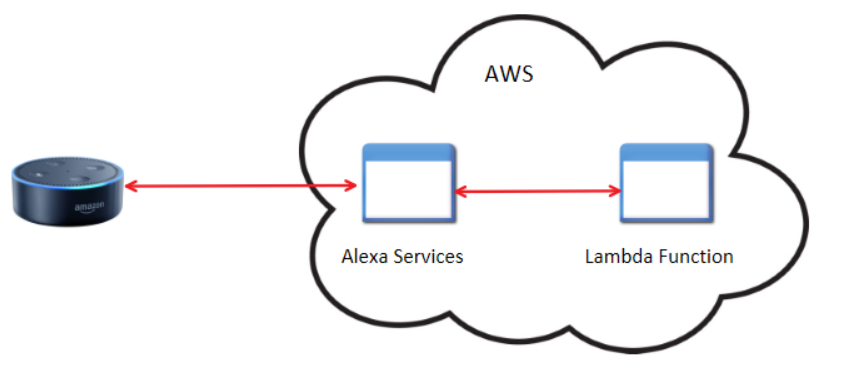

With an understanding of the Alexa ecosystem and some of the terminology I’ll be using, let’s look under the hood today. All Alexa devices depend on the cloud to do the heavy lifting. In the simplest case, this is what’s behind a basic Alexa skill.

You speak to your Alexa-enabled device, in this case a Dot: “Alexa, ask Invader Zim for a quote.” The device wakes up on the word “Alexa” and then sends your request, “ask Invader Zim for a quote,” to Alexa Voice Service. Alexa determines that your request refers to my skill, Invader Zim Quotes. Based on my skill’s configuration and its custom interaction model, this utterance triggers the GetQuoteIntent. The full request is then passed to my AWS Lambda function, AlexaInvaderZim.

This function is a simple C# program that loads a List of quotes and picks one at random. It adds that quote to an object that represents a well-formed Alexa response and ships it back through the Alexa services to your Dot. You then hear one of the quotes from the Invader Zim show.
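To make the request/response round trip concrete, here is a minimal sketch in Python (the author’s function is C#). It assumes the standard Alexa custom-skill JSON envelope: the intent name arrives at `request.intent.name`, and the reply carries `outputSpeech` and `shouldEndSession` under `response`. The placeholder quotes, the launch and goodbye prompts, and the `handle` and `speech_response` names are hypothetical.

```python
import json
import random
from typing import Optional

# Placeholder quotes; the real skill loads its own list of show quotes.
QUOTES = ["Placeholder quote one.", "Placeholder quote two.", "Placeholder quote three."]


def speech_response(text: str, end_session: bool = True) -> dict:
    """Wrap plain text in the Alexa custom-skill response envelope."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


def handle(body: str, rng: Optional[random.Random] = None) -> str:
    """Route an Alexa request (JSON text) and return the response as JSON text."""
    rng = rng or random.Random()
    request = json.loads(body)["request"]
    if request["type"] == "IntentRequest" and request["intent"]["name"] == "GetQuoteIntent":
        # Pick one quote at random and wrap it in a well-formed response
        return json.dumps(speech_response(rng.choice(QUOTES)))
    if request["type"] == "LaunchRequest":
        # Keep the session open so the user can ask for a quote
        return json.dumps(speech_response("Ask me for a quote.", end_session=False))
    # Anything else (help, stop, unknown intents) gets a short goodbye in this sketch
    return json.dumps(speech_response("Goodbye."))
```

Because the handler only maps JSON text in to JSON text out, the same logic can sit behind either a Lambda function or an Azure Function with a thin host-specific wrapper.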

What About Azure?

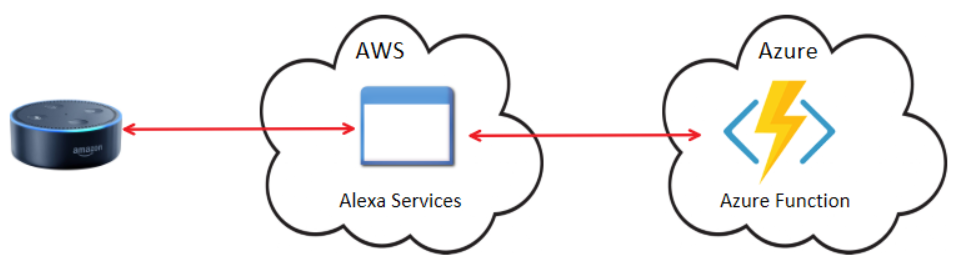

I’m heavily invested in Microsoft Azure, so doing as much as I can there makes me more effective. The cost is that you’re hopping back and forth between two clouds, and in web development you have to be hypersensitive to latency.

Using the same scenario as before, saying “Alexa, ask Invader Zim for a quote” follows the same path to Alexa Voice Service in AWS. The difference is that, rather than directing your request to a Lambda function, Alexa directs it to an endpoint in Azure that’s answered by an Azure Function. Funnily enough, the code in the Azure Function is nearly identical. This endpoint returns a well-formed Alexa response and sends it back through AWS to your Dot. In the end, you still get a random quote from the Invader Zim show.

Let’s Get Interesting

Now, you could do any of the following with AWS, but you’d need to refer to the Amazon documentation for details. Let’s say that, rather than Invader Zim quotes, we were dealing with a lot more source data. Keeping all of it inside the Azure Function would be costly, so external storage would be a better choice. But which storage do we want?

It depends.

We could stuff it in Blob Storage. It’s cheap, reliable, and relatively fast when you choose SSD-backed storage.

We could go with old faithful, SQL Database.

We could go cutting edge and choose Cosmos DB.


That’s the best thing about Azure: there are so many choices in how to accomplish your goals. Pick a solution, test it, and measure to see whether it’s the best fit for your project. In my early development of the Invader Zim Quotes skill, I backed the storage with Blob Storage, and things worked well enough.
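Whichever store you pick, one common way to limit the cost of the extra round trip is to load the data once per warm function instance and keep it in memory, so only cold starts pay for the storage read. The sketch below shows that pattern in Python. The `loader` callable is a hypothetical stand-in for whatever actually reads the document (for example, a blob download), and the JSON-array layout of the quote file is an assumption.

```python
import json
from typing import Callable, List, Optional


class QuoteCache:
    """Loads quotes once per function instance and reuses them on warm invocations."""

    def __init__(self, loader: Callable[[], str]):
        self._loader = loader  # hypothetical: returns the raw JSON text of the quote document
        self._quotes: Optional[List[str]] = None
        self.load_count = 0  # how many times storage was actually read

    def get(self) -> List[str]:
        if self._quotes is None:
            # Only the first call (a cold start) hits external storage
            self._quotes = json.loads(self._loader())
            self.load_count += 1
        return self._quotes
```

In a function app, the cache would typically live at module scope so it survives between invocations on the same instance; whether that instance stays warm is up to the hosting platform.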

You all know I’m a data guy, so I wanted to start pulling usage data out of my skill. I wanted to learn more about how people interacted with skills.

So I needed a place to write telemetry data. Fortunately, the volume and velocity of that data aren’t high enough that I need Event Hubs. I was on the fence about whether to write it to Blob Storage or Azure Data Lake Storage (ADLS). In the end, I wanted to use U-SQL to analyze my telemetry data, since it handles JSON data effectively, so I ended up writing my telemetry data to ADLS.
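One simple shape for that telemetry is one JSON object per line (newline-delimited JSON). It is easy to append to a file in the lake and easy to parse later. The Python sketch below builds such a line from an Alexa request envelope. The `requestId`, `type`, `intent.name`, and `context.System.device.deviceId` fields come from the Alexa request format. The record layout and the `outcome` labels are assumptions, and the actual write to ADLS is not shown.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def telemetry_line(envelope: dict, outcome: str, now: Optional[datetime] = None) -> str:
    """Serialize one telemetry record as a single line of JSON (NDJSON)."""
    request = envelope.get("request", {})
    device = envelope.get("context", {}).get("System", {}).get("device", {})
    record = {
        "timestamp": (now or datetime.now(timezone.utc)).isoformat(),
        "requestId": request.get("requestId"),
        "requestType": request.get("type"),
        "intent": request.get("intent", {}).get("name"),
        "deviceId": device.get("deviceId"),
        "outcome": outcome,  # e.g. "success" or "failure"; labels are this sketch's choice
    }
    # Compact separators keep each record on one short line
    return json.dumps(record, separators=(",", ":")) + "\n"
```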

Once I had my telemetry storage in place, I wanted to start visualizing the data, so I stood up a workspace in my Power BI instance to explore it further. Eventually, this exploration will show me new utterances or additional features I’ll want to add to my skill. U-SQL can then churn out new configuration files that I can deploy to my skill registration in AWS, closing the loop between gathering data and acting on it.

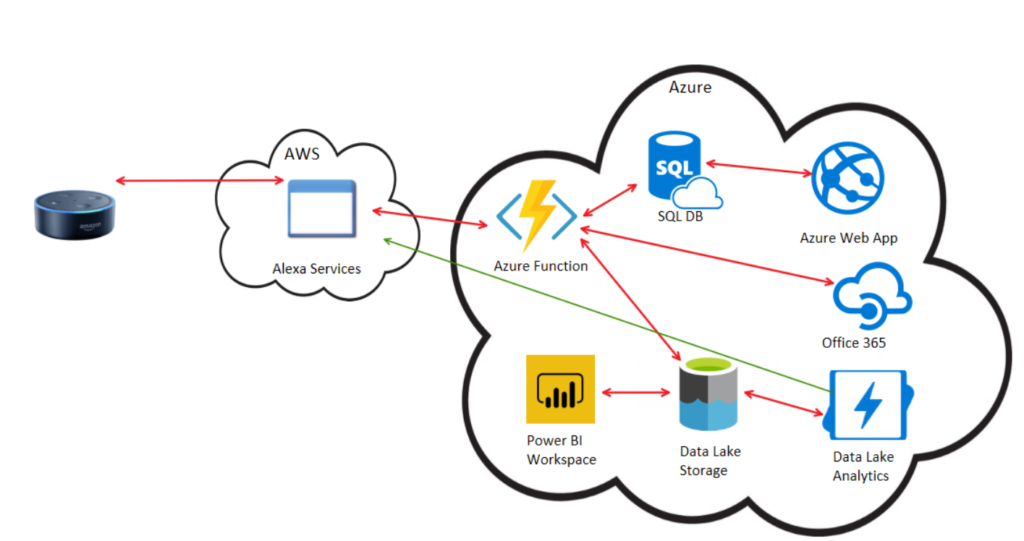

Receptionist Infrastructure

So, bringing all this together into one diagram, let’s consider a new Alexa skill: a virtual receptionist. This skill would run on an Alexa-based device placed at the front desk or in the lobby of your company. Visitors would arrive and then check in via this device. The skill would take the visitor’s name and the name of the person they wish to see, use that along with the time and location to contact the person they’re meeting, and offer a few other pleasant features. The infrastructure for this solution is far more advanced; it’s the infrastructure we’ll build out in the remaining blog entries in this series.

We start this diagram on the left with our Dot: “Alexa, tell the receptionist Test User is here to see Shannon Lowder.” Like all skill requests, this one is sent over to AWS, which routes it to the appropriate endpoint.
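By the time the request reaches our endpoint, the two names in that sentence arrive as slot values on the matched intent, under `request.intent.slots`. The sketch below pulls them out in Python. The intent name `CheckInIntent` and the slot names `Visitor` and `Host` are hypothetical, since the interaction model for this skill hasn’t been designed yet. A slot Alexa could not fill simply has no `value`, so the sketch returns `None` for it.

```python
import json
from typing import Optional, Tuple


def get_slot_value(intent: dict, slot_name: str) -> Optional[str]:
    """Return a slot's spoken value, or None if Alexa did not fill it."""
    slot = intent.get("slots", {}).get(slot_name, {})
    return slot.get("value")


def parse_check_in(body: str) -> Tuple[Optional[str], Optional[str]]:
    """Extract (visitor, host) from a hypothetical CheckInIntent request."""
    request = json.loads(body)["request"]
    if request.get("type") != "IntentRequest" or request["intent"]["name"] != "CheckInIntent":
        return None, None
    intent = request["intent"]
    return get_slot_value(intent, "Visitor"), get_slot_value(intent, "Host")
```

A missing `Host` value is a natural trigger for a follow-up prompt (“Who are you here to see?”) rather than an error.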

One of the things an Alexa-enabled device can do (if you grant the permission) is share the address at which the device resides. The device’s unique identifier is passed along with the request to our Azure Function, and with that permission granted, the function can also retrieve the device’s address. The function uses the device ID to look up the configuration information in an Azure SQL Database.

This database is populated by an Azure Web App that we will build to manage these configuration settings. For the receptionist to access company calendars, we have to grant this Azure Function permission to read calendars in our Office 365 environment. The easiest way to do this is through a website where you can sign in to both the Receptionist app and Office 365. Other configuration values you could set in this app include hours of operation, office addresses, and the Alexa devices allowed to interact with this system.
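The device-to-configuration lookup and the hours-of-operation check can be sketched together. The Python below uses the standard-library `sqlite3` module as a local stand-in for Azure SQL Database. The `receptionist_device` table and its columns are hypothetical, and so is the weekdays-only rule. The device ID location (`context.System.device.deviceId`) is part of the Alexa request format. Treating an unregistered device as “not allowed” mirrors the allowed-devices setting described above.

```python
import sqlite3
from datetime import datetime, time
from typing import Optional

# Hypothetical schema for the configuration the web app maintains
SCHEMA = """
CREATE TABLE receptionist_device (
    device_id   TEXT PRIMARY KEY,
    office_name TEXT NOT NULL,
    open_at     TEXT NOT NULL,  -- 'HH:MM', local office time
    close_at    TEXT NOT NULL
)
"""


def device_id_from(envelope: dict) -> str:
    """Alexa places the device identifier under context.System.device.deviceId."""
    return envelope["context"]["System"]["device"]["deviceId"]


def load_config(conn: sqlite3.Connection, device_id: str) -> Optional[dict]:
    """Look up the office configuration registered for an Alexa device ID."""
    row = conn.execute(
        "SELECT office_name, open_at, close_at FROM receptionist_device WHERE device_id = ?",
        (device_id,),  # parameterized to avoid SQL injection
    ).fetchone()
    if row is None:
        return None  # unregistered devices are not allowed to use the receptionist
    return {
        "office_name": row[0],
        "open_at": time.fromisoformat(row[1]),
        "close_at": time.fromisoformat(row[2]),
    }


def is_open(config: dict, now: datetime) -> bool:
    """Weekdays only in this sketch (Monday=0 ... Friday=4), closing time exclusive."""
    return now.weekday() < 5 and config["open_at"] <= now.time() < config["close_at"]
```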

When the Azure Function locates the correct configuration for the request, it uses the name of the person the visitor is here to see to look up that employee’s contact information and calendar in Office 365. That information is returned to the Azure Function.
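Office 365 users and calendars are exposed through Microsoft Graph, which has a `/users` endpoint that accepts an OData `$filter` and a `/users/{id}/calendarView` endpoint that takes `startDateTime` and `endDateTime`. The Python sketch below only builds those request URLs. Authentication (the bearer token obtained through the permission grant) and the HTTP calls themselves are left out, and the one-hour look-ahead window is an arbitrary choice.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import quote, urlencode

GRAPH = "https://graph.microsoft.com/v1.0"


def find_user_url(display_name: str) -> str:
    """URL that searches the directory for a user by display name."""
    escaped = display_name.replace("'", "''")  # OData escapes a single quote by doubling it
    query = urlencode({"$filter": f"displayName eq '{escaped}'"}, safe="$'", quote_via=quote)
    return f"{GRAPH}/users?{query}"


def calendar_view_url(user_id: str, start: datetime, window: timedelta) -> str:
    """URL for a user's calendar events between start and start + window (sent as UTC)."""
    start_utc = start.astimezone(timezone.utc)  # naive datetimes are treated as local time
    fmt = "%Y-%m-%dT%H:%M:%SZ"
    query = urlencode(
        {"startDateTime": start_utc.strftime(fmt), "endDateTime": (start_utc + window).strftime(fmt)},
        quote_via=quote,
    )
    return f"{GRAPH}/users/{quote(user_id, safe='')}/calendarView?{query}"
```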

The contact information in Office 365 is used to alert the employee that their guest has arrived. This could be an email, IM, or phone call. Yes, Alexa now supports calling! The calendar information can be used to determine which method is best for the employee. If they are currently in a meeting, the notification may be an IM or email message. If the employee shows as available, the device the visitor used could be connected to the employee’s phone, and the two could begin a conversation.
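The decision described above reduces to a small rule. The Python sketch below treats the employee as busy if “now” falls inside any calendar event (start inclusive, end exclusive). It sends an IM when they are busy, places a call when they are free and have a phone number, and falls back to email otherwise. The channel names and this exact precedence are illustrative assumptions, not a fixed design.

```python
from datetime import datetime
from typing import Iterable, Tuple

Event = Tuple[datetime, datetime]  # (start, end) of a calendar entry


def is_busy(events: Iterable[Event], now: datetime) -> bool:
    """True if now falls inside any event (start inclusive, end exclusive)."""
    return any(start <= now < end for start, end in events)


def choose_notification(events: Iterable[Event], now: datetime, has_phone: bool) -> str:
    if is_busy(events, now):
        return "instant_message"  # don't ring someone who is in a meeting
    return "call" if has_phone else "email"
```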

Now, at each of these steps, telemetry data can be collected. Each request from the visitor’s Dot can be recorded and logged to Data Lake Storage. The reason I’d want to collect this is that I don’t know every variation of how users will speak to the Dot. If I collect this information, I may learn new utterances, or even whole new intents I wish to enable for the skill. I also want to know how many attempts succeed and how many fail, which can help me devise new prompts or interactions within my skill.
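Counting successes and failures per intent is a simple group-by over the telemetry lines. In this pipeline, that analysis would run in U-SQL on Data Lake Analytics. The Python below only illustrates the grouping logic, and it assumes each line is a JSON record with hypothetical `intent` and `outcome` fields.

```python
import json
from collections import Counter, defaultdict
from typing import Dict, Iterable


def outcome_summary(lines: Iterable[str]) -> Dict[str, Dict[str, int]]:
    """Count outcomes per intent from newline-delimited JSON telemetry."""
    summary: Dict[str, Counter] = defaultdict(Counter)
    for line in lines:
        if not line.strip():
            continue  # skip blank lines, e.g. a trailing newline at end of file
        record = json.loads(line)
        # Requests that never matched an intent are grouped under "(none)"
        summary[record.get("intent") or "(none)"][record["outcome"]] += 1
    return {intent: dict(counts) for intent, counts in summary.items()}
```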

How will I spot patterns in this raw data? I’ll first use Data Lake Analytics to collate the requests and explore the raw data. As I find interesting groups of data, I can kick those over to Power BI to explore further. When I do find a new intent or utterance, I can craft this change manually. My preference would be for these changes to be created automatically and sent over to AWS for additional testing. That’s what the green line represents: automation, and ultimately machine learning, in this process.
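The automated half of that loop ends in an updated interaction model. Alexa interaction models are JSON documents that list each intent’s sample utterances under `interactionModel.languageModel.intents[].samples`. The Python sketch below merges newly discovered samples into such a document without duplicating existing ones and without mutating the original. Where the new samples come from and how the result is deployed to AWS are outside the sketch, and the `add_samples` name is hypothetical.

```python
import copy
from typing import Dict, List


def add_samples(model: dict, new_samples: Dict[str, List[str]]) -> dict:
    """Return a copy of an interaction model with new sample utterances merged in."""
    updated = copy.deepcopy(model)  # leave the caller's model untouched
    for intent in updated["interactionModel"]["languageModel"]["intents"]:
        samples = intent.setdefault("samples", [])
        existing = set(samples)  # exact match: slot references like {Host} are case-sensitive
        for sample in new_samples.get(intent["name"], []):
            if sample not in existing:
                samples.append(sample)
                existing.add(sample)
    return updated
```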

Now that we have the infrastructure, we’re going to start designing our Receptionist skill. This starts with a mock dialog and then moves into a flow chart of sorts. The last step is to build out an interaction model and backend service to support it. Join me next time, when we’ll get right to it! In the meantime, if you have any questions, please let me know. I’m here to help!