Spark engines like Databricks are optimized for dealing with many small-ish files that have already been loaded into your Hadoop-compatible file system. If you want to process data from external sources, you’ll want to extract that data into files and store those in your Azure Data Lake Storage (ADLS) account attached to your Databricks Workspace….

Category: Data Engineering

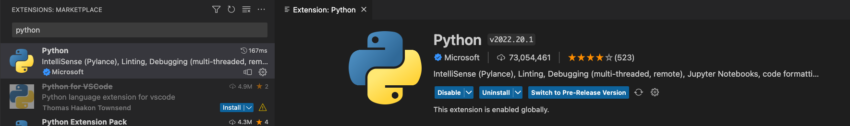

Prepare VSC Local Databricks Development

Last time, we walked through how to perform analysis on Databricks using Visual Studio Code (VSC). This time, we will set up a local solution in VSC that will let us build out our data engineering solutions locally. That way, we don’t have to pay for development and testing time. We’d only pay for Databricks,…

Data Engineering for Databricks

Since Databricks is a PaaS option for Spark and Spark is optimized to work on many small files, you might find it odd that you have to get your sources into a file format before you see Databricks shine. The good news is Databricks has partnered with several different data ingestion solutions to ease loading…

Databricks for SQL Professionals

I’ve been a Microsoft data professional for over 20 years. I’ve spent most of that time in the SQL Server stack: the core query engine, SSIS, SSRS, and a little SSAS. But times changed, and the business problems grew more complex. As they did, I looked at other technologies to try to answer those questions….

Notebooks Explore Data

On a recent engagement, I was asked to provide best practices. I realized that many of the best practices hadn’t been collected here, so it’s time I fix that. The client was early in their journey of adopting Databricks as their data engine, and a lot of the development they were doing was free-form. They…

The Big Cost in Data Science

You hear time and time again that 50 to 80 percent of a data science project is spent on data wrangling, munging, and transforming raw data into something usable. For me personally, I’ve automated a lot of those steps. Over the last 20 years, I’ve built tools that help me do more in less time….

NOAA Radar and Severe Weather Data Inventory

After I finished evaluating the Storm Events Database from NOAA, I was convinced we needed to look for machine-recorded events. When you start poking around the NOAA site looking for radar data, you’ll find a lot of information about how they record this data in binary block format. Within this data, you’ll find measurements…

Data Quality Issues

This entry picks up the story behind my first data science project: predicting hail damage to farms. In this article, we identify data quality issues in our first data source, starting with property and crop damage. In the NOAA documentation, these two columns were recorded to say how much property and crop damage occurred in a given…

Metadata Model Update

As I began learning Biml, I developed my original metadata model to help automate as much of my BI development as I could. This model still works today, but as I work with more file-based solutions in Azure Data Lakes, and some “Big Data” solutions, I’m discovering its limitations. Today I’d like to talk…

Data Warehouse Efficiency

How quickly do you get from the business coming to you with “we need a data warehouse” to delivering that warehouse? If you’ve sat through any Biml talk, you’ve undoubtedly heard stories of thousands of staging packages being generated per hour. You may have even heard tales of source systems being analyzed in hours, rather than…