If you work with CSV files, you know how important it is to have a clear and consistent schema for your data. A schema defines your data’s structure, format, and types and helps you validate, transform, and analyze it. The problem is that CSV files have no INFORMATION_SCHEMA or similar metadata store you can query to…

Tag: Python

Metadata-Driven Python

You will manually build your ingestion code when you first learn to ingest data into any new engine. This makes sense; you’re just getting started. You want to learn how the engine will read the data and then write it back again. You want to learn how to log what’s happening during ingestion. You want…
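The step beyond hand-built ingestion is to describe each source as metadata and let one generic routine do the work. Here is a minimal sketch of that pattern, assuming the metadata is just a delimiter and a column-to-type map per source. `SourceSpec`, `SOURCES`, and `ingest` are hypothetical names, and in a real pipeline the metadata would more likely live in a table or config file than in code.

```python
from __future__ import annotations

import csv
import io
import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("ingest")


@dataclass
class SourceSpec:
    """Hypothetical metadata record describing one source to ingest."""
    name: str
    delimiter: str
    columns: dict[str, type]  # column name -> callable used to cast the raw text


# Hypothetical metadata; adding a source means adding a row here, not new code.
SOURCES = [
    SourceSpec("customers", ",", {"id": int, "name": str}),
    SourceSpec("orders", "|", {"order_id": int, "amount": float}),
]


def ingest(spec: SourceSpec, raw_text: str) -> list[dict]:
    """Read delimited text and cast each column as the metadata describes."""
    reader = csv.DictReader(io.StringIO(raw_text), delimiter=spec.delimiter)
    rows = [
        {column: cast(row[column]) for column, cast in spec.columns.items()}
        for row in reader
    ]
    log.info("ingested %d rows from %s", len(rows), spec.name)
    return rows


if __name__ == "__main__":
    raw = {
        "customers": "id,name\n1,Ada\n2,Grace\n",
        "orders": "order_id|amount\n10|9.5\n",
    }
    for spec in SOURCES:
        print(spec.name, ingest(spec, raw[spec.name]))
```

The loop at the bottom never changes as sources are added; only the metadata grows, which is the main payoff of the approach.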

Testing Ingest

Last time, we built a simple transform function in Python, but how do we know if it works? We need to build some tests to find out. I admit data engineering has been slow to adopt testing practices, but they’re not too hard to pick up. Let’s work through a simple data test, a…
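To give a flavor of what a data test looks like, here is a minimal sketch in pytest style. The `transform` function below is a hypothetical stand-in, not the one from the earlier post: it assumes rows arrive as dictionaries of strings, trims names, casts `amount` to float, and drops rows with no `id`. Each test feeds a tiny, hand-written input and asserts on the exact output.

```python
from __future__ import annotations


def transform(rows: list[dict]) -> list[dict]:
    """Hypothetical transform: drop rows without an id, cast and trim the rest."""
    out = []
    for row in rows:
        if not row.get("id"):
            continue  # rows with a missing or empty id are discarded
        out.append({
            "id": int(row["id"]),
            "name": row["name"].strip(),
            "amount": float(row["amount"]),
        })
    return out


def test_transform_casts_and_trims():
    rows = [{"id": "1", "name": "  Ada ", "amount": "9.50"}]
    assert transform(rows) == [{"id": 1, "name": "Ada", "amount": 9.5}]


def test_transform_drops_rows_without_id():
    rows = [
        {"id": "", "name": "x", "amount": "1"},
        {"id": "2", "name": "y", "amount": "2"},
    ]
    assert [row["id"] for row in transform(rows)] == [2]


def test_transform_handles_empty_input():
    assert transform([]) == []


if __name__ == "__main__":
    # pytest discovers the test_* functions automatically; without pytest,
    # calling them directly works too, and an AssertionError marks a failure.
    for test in (
        test_transform_casts_and_trims,
        test_transform_drops_rows_without_id,
        test_transform_handles_empty_input,
    ):
        test()
    print("all tests passed")
```

Keeping inputs small and explicit makes a failing test easy to read: the expected rows sit right next to the rows that produced them.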

Developing Databricks Ingestion Locally

Spark engines like Databricks are optimized for dealing with many small-ish files that have already been loaded into your Hadoop-compatible file system. If you want to process data from external sources, you’ll want to extract that data into files and store those in your Azure Data Lake Storage (ADLS) account attached to your Databricks Workspace….
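The extraction step can be as simple as writing records from the external source into a series of moderately sized files before landing them in storage. The sketch below covers only that local staging half. It assumes records arrive as dictionaries with identical keys, and the chunk size of 1,000 rows is an arbitrary assumption. `extract_to_files` is a hypothetical helper, and the upload to ADLS is deliberately not shown.

```python
from __future__ import annotations

import csv
from pathlib import Path
from typing import Iterable


def extract_to_files(
    records: Iterable[dict], out_dir: Path, prefix: str, rows_per_file: int = 1000
) -> list[Path]:
    """Write records into numbered CSV files of at most rows_per_file rows each."""
    if rows_per_file < 1:
        raise ValueError("rows_per_file must be at least 1")
    out_dir.mkdir(parents=True, exist_ok=True)
    written: list[Path] = []
    batch: list[dict] = []

    def flush() -> None:
        # Name files prefix_00000.csv, prefix_00001.csv, ... in write order.
        path = out_dir / f"{prefix}_{len(written):05d}.csv"
        with path.open("w", newline="", encoding="utf-8") as handle:
            writer = csv.DictWriter(handle, fieldnames=list(batch[0].keys()))
            writer.writeheader()
            writer.writerows(batch)
        written.append(path)
        batch.clear()

    for record in records:
        batch.append(record)
        if len(batch) >= rows_per_file:
            flush()
    if batch:
        flush()  # write the final, partially filled file
    return written
```

Because `records` can be any iterable, including a generator reading from an API or database cursor, only one batch is held in memory at a time.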

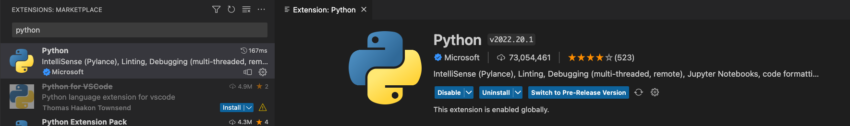

Prepare VSC Local Databricks Development

Last time, we walked through how to perform analysis on Databricks using Visual Studio Code (VSC). This time, we will set up a local solution in VSC that will let us build out our data engineering solutions locally. That way, we don’t have to pay for development and testing time. We’d only pay for Databricks,…