When we start developing in a new language or on a new platform, it’s easy to fall into the trap of monolithic design. The free flow from idea to code leads to a single blob of functional code. This leads to quick prototype code that meets the functional requirements. The problem with this approach creeps…

Author: slowder

Testing Ingest

Last time, we built a simple transform function in Python, but how do we know if it works? We need to build some tests to find out. I admit data engineering has been late to the practice of test development, but it’s not too hard to adopt. Let’s work through a simple data test, a…

Developing Databricks Ingestion locally

Spark engines like Databricks are optimized for dealing with many small-ish files that have already been loaded into your Hadoop-compatible file system. If you want to process data from external sources, you’ll want to extract that data into files and store those in your Azure Data Lake Storage (ADLS) account attached to your Databricks Workspace….

Prepare VSC Local Databricks Development

Last time, we walked through how to perform analysis on Databricks using Visual Studio Code (VSC). This time, we will set up a local solution in VSC that will let us build out our data engineering solutions locally. That way, we don’t have to pay for development and testing time. We’d only pay for Databricks,…

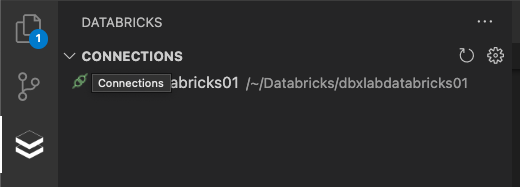

Get Started with Databricks in VSCode

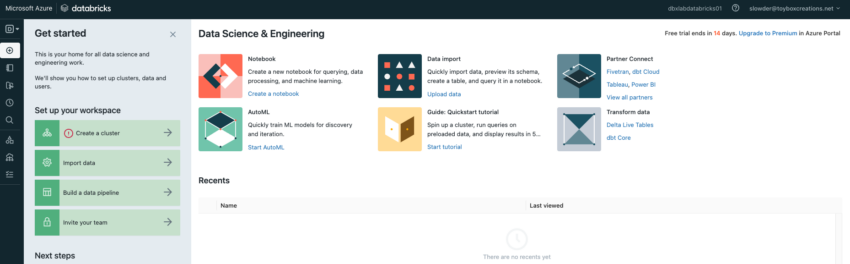

You’ve just received a new dataset, and you have to analyze it to prepare for building out the data ingestion pipeline. But first, we’ll need to create a cluster to run our analysis. Let’s run through a simple data analysis exercise using Databricks and Visual Studio Code (VSC). Create a cluster from the Web…

Connecting Visual Studio Code to Databricks

After you have your Databricks workspace, it’s time to set up your IDE. Head over to https://code.visualstudio.com/ to download the version for your operating system. It’s available for Windows, Mac, or Linux. During my most recent Databricks presentation, I was asked to point out that Visual Studio Code (VSC) is separate from Visual Studio. It…

Provisioning Databricks

Now that you’ve had an introduction, let’s get started exploring Databricks. Head to https://community.cloud.databricks.com and click the sign up link at the bottom. The community edition is a completely free option. Fill in your contact information. It may help to use a ‘+’ email address to sign up; that way, you can later sign up…

Reporting and Dashboarding in Databricks

As a SQL Server user moving into Databricks, reporting and dashboarding can be a more manageable learning task. It all begins by changing to the SQL Persona in Databricks. Click the persona icon from the upper left menu, and choose SQL. Once in the SQL persona, you can begin querying any data your workspace has…

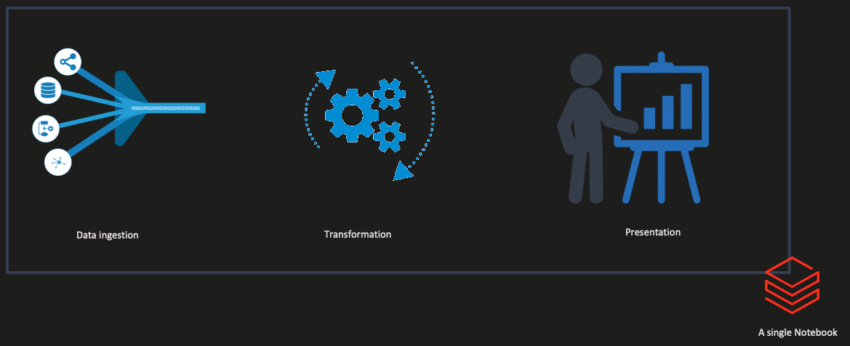

Data Engineering for Databricks

Since Databricks is a PaaS option for Spark and Spark is optimized to work on many small files, you might find it odd that you have to get your sources into a file format before you see Databricks shine. The good news is Databricks has partnered with several different data ingestion solutions to ease loading…

High-level Databricks Compute

Last time, I compared SQL Server storage to Databricks storage. This time, let’s compare SQL Server compute to Databricks compute. How SQL Server Processes a Query When you submit your workload to SQL Server, the engine will first parse it to ensure it’s syntactically correct. If it’s not, then it fails and returns right away….